How to avoid Facebook's psychological manipulation experiments - ASCII.jp

- By huaweicomputers

- 28/05/2022

There is only one way, and it is simple.

A research team recently published a paper detailing how the emotions of 689,003 Facebook users were manipulated in an experiment conducted in 2012. The purpose of the experiment was to determine whether positive and negative posts affect users' moods.

They do. Depending on whether their News Feeds showed more positive or more negative posts, users reacted differently, and what they themselves posted changed accordingly.

In a sense, the team also ran a second experiment on Facebook users, one that nobody had agreed to and that the team launched on its own initiative: would announcing this experiment provoke the anger of ordinary Facebook users, academics, and scientists? Judging from what happened next, the answer was, of course, a resounding "yes."

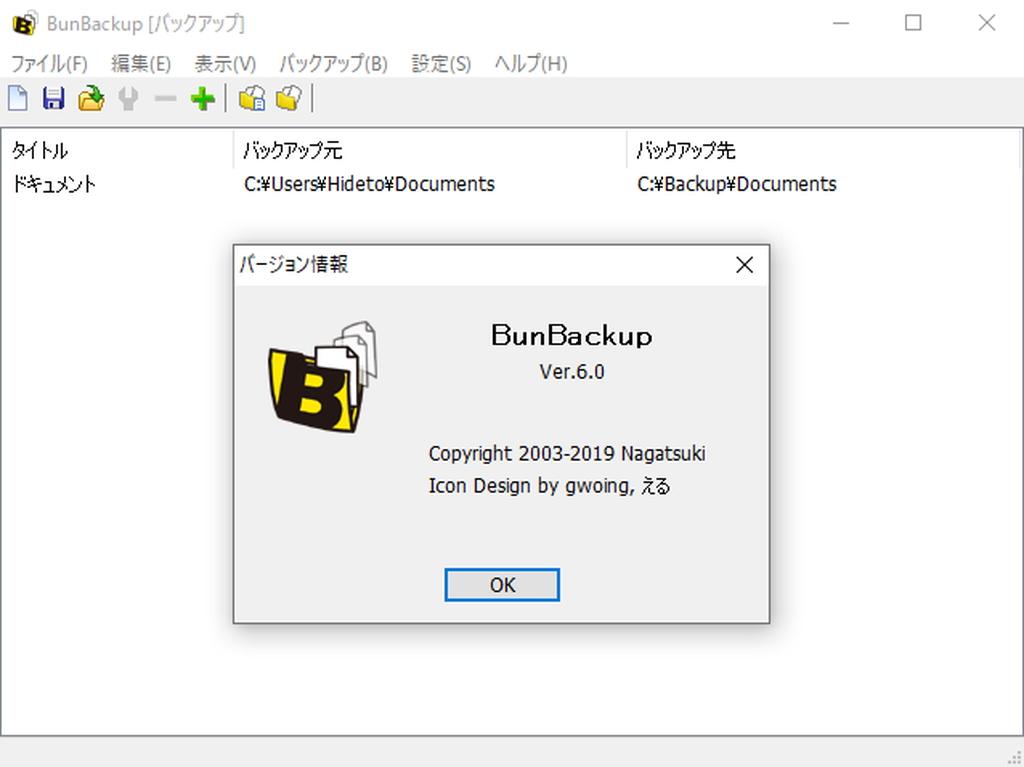

Facebook's experiment

In January 2012, the team ran two experiments simultaneously over the course of one week. In one, they altered Facebook's News Feed algorithm to reduce the number of positive posts appearing in the timelines of selected users. In the other, they reduced the number of negative posts.

The paper entitled "Experimental evidence of large-scale emotional contagion in social networks" describes the following:

We show, via a massive (N = 689,003) experiment on Facebook, that emotional states can be transferred to others via emotional contagion, leading people to experience the same emotions without their awareness. We provide experimental evidence that emotional contagion occurs without direct interaction between people (exposure to a friend expressing an emotion is sufficient), and in the complete absence of nonverbal cues.

The experiment was carried out by researchers at Cornell University and the University of California, San Francisco, together with Adam Kramer, a data scientist at Facebook.

Users have no way of knowing whether they "participated" in the experiment. This is not only because Facebook selected participants at random, but also because users were never told that they were taking part in a scientific experiment. Normally, participants in a scientific experiment give informed consent: they agree to have their data used for the study, and they know when the experiment is taking place.

However, Facebook did not seek the subjects' consent before the experiment. Instead, the paper argues that because they are Facebook users, the company was entitled to treat them as experimental subjects under the Data Use Policy that everyone must agree to when creating an account.

Yet the policy contains no provision allowing users' emotions to be manipulated for scientific purposes. What it does say is that information may be used for "internal operations, including troubleshooting, data analysis, testing, research and service improvement." Moreover, according to Kashmir Hill of Forbes, the word "research" was not added to the policy until May 2012, several months after the experiment in question had been carried out.

James Grimmelmann, a law professor at the University of Maryland, calls the idea of deriving users' consent to scientific experiments from a company's terms of service "bogus."

He said in an interview: "When we do research, we obtain informed consent, because it is important to spell things out in clear language. Facebook disclosed nothing about manipulating the content it displays."

How not to become a subject

So if Facebook claims that by becoming users, people agreed to have their information treated as material for scientific research, is there any way to avoid it?

There is: just quit Facebook.

Get off Facebook. Get your family off Facebook. If you work there, quit. They're fucking awful.

-Erin Kissane (@kissane) June 28, 2014

Privacy settings let users keep other users from viewing their profiles and photos, but there is no setting that stops Facebook itself from using their data. Simply by being users, people give Facebook the authority to manipulate their News Feeds in order to analyze human behavior, and even to manipulate their emotions.

Keep in mind that the experiment described in this paper was carried out two and a half years ago. What has Facebook been doing in the meantime? Could you be a guinea pig in a Facebook experiment right now, and only find out about it years from now?

As long as the Data Use Policy stays as it is, that is entirely possible.

For Facebook, the user is just a data point

Of course, Facebook manipulates users' emotions whether they realize it or not. In the experiment in question, the research team was investigating how users' moods affect what they post, and how to keep users using the service. Facebook does the same thing with advertising.

Facebook is a free service, but the price of its convenience is paid in data rather than money. Using every post and every piece of data it holds, Facebook can tailor ad campaigns to each user's individual behavior. Moreover, the data is no longer limited to what users post on Facebook: their activity across the Internet has become subject to analysis as well.

Marc Andreessen, a member of Facebook's board of directors, and others have pointed out that the "emotional contagion" experiment is no different from the other advertising-related experiments Facebook runs.

Helpful hint: Whenever you watch TV, read a book, open a newspaper, or talk to another person, someone's manipulating your emotions!

-Marc Andreessen (@pmarca) June 29, 2014

Unlike in this experiment, however, with ads on Facebook we can see what is being shown to us. Ads matched to our interests appear on screen one after another. We use a service funded by advertising in exchange for our own information. Even so, there are not many ways to avoid being targeted.

Grimmelmann says: "If the terms of an exchange are not shown to the other party, there is no bargain. The condition Facebook requires in exchange is that users see ads. Users know that the data Facebook collects is used to display advertising."

Ultimately, the purpose of the experiment could be described as finding ways to keep people using Facebook even when it makes them uncomfortable.

Data scientist Adam Kramer published the following post on Facebook:

The reason we did this research is because we care about the emotional impact of Facebook and the people that use our product. We felt that it was important to investigate the common worry that seeing friends post positive content leads to people feeling negative or left out. At the same time, we were concerned that exposure to friends' negativity might lead people to avoid visiting Facebook. (Emphasis added by the author.)

Facebook had many ways to run the same experiment without provoking so much revulsion. For example, if it had asked users to volunteer for a study on how social media affects behavior, there would have been no ethical questions about manipulating their positive and negative emotions.

In the same post, Kramer apologized for "the way the paper described the research and any anxiety it caused," and acknowledged: "In hindsight, the research benefits of the paper may not have justified all of this anxiety."

The study sits at the intersection of academia and Silicon Valley's tech culture. For years, Facebook's motto was "move fast and break things," and this time it has clearly shaken users' trust.

"There is a big gap between the thinking in academia and in Silicon Valley," Grimmelmann said. "It has become clear that Silicon Valley's data practices are unethical."

On Facebook, we are used to handing over our data in exchange for seeing companies' ads. But if Facebook uses that data for purposes not documented in its privacy policy, its more than 1 billion users all become potential subjects, whether they like it or not.

Unless Facebook changes this practice, there is only one way to avoid becoming a subject: delete your Facebook account.

Top image: Ludovic Toinel (via Flickr)

Original article by Selena Larson

This article is reprinted from ReadWrite Japan.